Stage 2: Rearrange Dice

In this stage, the task is to arrange a number of dice in a given, randomly generated pattern. See Task 2: Rearrange Dice for the general task description.

About the Dice

The dice are standard six-sided dice (D6). They all look the same, so no distinction is made between individual dice.

- Width: 22 mm
- Weight: ~12 g

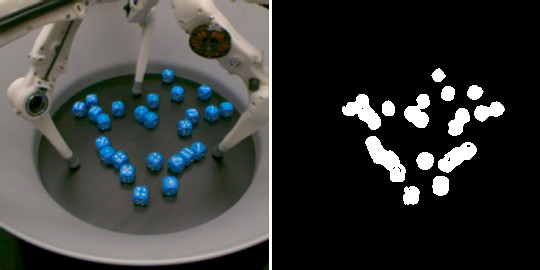

Detecting the Dice in the Camera Images

In this stage, ready-to-use object tracking is no longer provided, so you will have to find the dice yourselves using the camera images. However, we do provide a function that separates pixels belonging to dice from the background (the same function is also used for evaluation):


trifinger_object_tracking.py_lightblue_segmenter.segment_image(image_bgr: numpy.ndarray) → numpy.ndarray

Segment the light-blue areas of the given image.

- Parameters: image_bgr – The image in BGR colour space.
- Returns: The segmentation mask.

Usage Example:

```python
import cv2

from trifinger_object_tracking.py_lightblue_segmenter import segment_image

# load the image
img = cv2.imread("path/to/image.png")

# get the segmentation mask
mask = segment_image(img)

# show the mask
cv2.imshow("Segmentation Mask", mask)
cv2.waitKey()
```
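The segmentation mask only tells you which pixels belong to dice; you still have to turn it into individual dice. One common first step, shown here as a minimal sketch, is connected-component labelling: each 4-connected group of mask pixels becomes one blob with a pixel area and a centroid in image coordinates. The names find_blobs and min_area are hypothetical and not part of trifinger_object_tracking, and the sketch assumes a 2D (single-channel) mask in which non-zero values mark die pixels. Keep in mind that dice touching each other in the image merge into a single blob, and that the centroids are 2D pixel coordinates, so 3D positions would still require combining the views of several cameras.

```python
from collections import deque


def find_blobs(mask, min_area=1):
    """Find 4-connected groups of die pixels in a 2D segmentation mask.

    mask: 2D sequence of rows (nested lists or a NumPy array); any non-zero
        value is treated as a die pixel (assumption, see text above).
    min_area: blobs with fewer pixels are discarded (e.g. segmentation noise).

    Returns a list of (area, (row, col)) tuples, where (row, col) is the
    blob centroid in pixel coordinates, in the order in which the blobs are
    found when scanning the image row by row.
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    visited = [[False] * width for _ in range(height)]
    blobs = []

    for r in range(height):
        for c in range(width):
            if not mask[r][c] or visited[r][c]:
                continue

            # Breadth-first flood fill from the first unvisited die pixel.
            queue = deque([(r, c)])
            visited[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (
                        0 <= ny < height
                        and 0 <= nx < width
                        and mask[ny][nx]
                        and not visited[ny][nx]
                    ):
                        visited[ny][nx] = True
                        queue.append((ny, nx))

            area = len(pixels)
            if area >= min_area:
                row = sum(p[0] for p in pixels) / area
                col = sum(p[1] for p in pixels) / area
                blobs.append((area, (row, col)))

    return blobs


example_mask = [
    [0, 255, 255, 0, 0],
    [0, 255, 255, 0, 0],
    [0, 0, 0, 0, 255],
]
print(find_blobs(example_mask))  # [(4, (0.5, 1.5)), (1, (2.0, 4.0))]
print(find_blobs(example_mask, min_area=2))  # [(4, (0.5, 1.5))]
```

For full-resolution camera images this pure-Python loop is slow; OpenCV's cv2.connectedComponentsWithStats (with connectivity=4) computes labels, areas and centroids of the same kind much faster. Note that OpenCV reports centroids as (x, y), i.e. column first.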

Reward Computation

The reward is computed using trifinger_simulation.tasks.rearrange_dice.evaluate_state(). It expects as input a list of “goal masks” as well as a list of segmentation masks of the actual scene, one mask per camera in each list.

Below is an example including the necessary initialisation of the goal masks. Alternatively, you can use the RealRobotRearrangeDiceEnv class of the example package, which implements this and provides a compute_reward method.

```python
import pathlib

from trifinger_simulation.tasks import rearrange_dice
from trifinger_simulation.camera import load_camera_parameters
from trifinger_cameras.utils import convert_image
from trifinger_object_tracking.py_lightblue_segmenter import segment_image

CONFIG_DIR = pathlib.Path("/etc/trifingerpro")

# Initialisation
# ==============

# for the example use a new random goal
goal = rearrange_dice.sample_goal()

# load camera parameters
camera_params = load_camera_parameters(
    CONFIG_DIR, "camera{id}_cropped_and_downsampled.yml"
)

# generate goal masks for the given goal and cameras
goal_masks = rearrange_dice.generate_goal_mask(camera_params, goal)

# Reward computation for one time step
# ====================================

# Input: camera_observation (as returned by
# TriFingerPlatform.get_camera_observation())

# convert the raw camera images to BGR and segment them
images = [convert_image(c.image) for c in camera_observation.cameras]
segmentation_masks = [segment_image(image) for image in images]

# evaluate_state() returns a cost, so the reward is its negation
reward = -rearrange_dice.evaluate_state(goal_masks, segmentation_masks)
```
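To build some intuition for a reward that is computed from masks rather than from dice poses, here is a toy pixel-wise comparison. toy_mask_cost is a hypothetical function and deliberately not the formula implemented by rearrange_dice.evaluate_state(); check that function's documentation for the actual definition. The sketch assumes one goal mask and one segmentation mask per camera with identical image sizes, treats non-zero values as set pixels, and returns the fraction of segmented die pixels (pooled over all cameras) that lie outside the goal masks.

```python
def toy_mask_cost(goal_masks, segmentation_masks):
    """Toy cost: fraction of segmented die pixels outside the goal masks.

    Hypothetical illustration, NOT the formula of
    rearrange_dice.evaluate_state(). Expects one goal mask per
    segmentation mask (one pair per camera), each a 2D sequence of rows
    of equal size; non-zero values count as set pixels.
    """
    if len(goal_masks) != len(segmentation_masks):
        raise ValueError("expected one goal mask per segmentation mask")

    outside = 0  # die pixels that are not covered by the goal mask
    total = 0  # all die pixels
    for goal, actual in zip(goal_masks, segmentation_masks):
        for goal_row, actual_row in zip(goal, actual):
            for g, a in zip(goal_row, actual_row):
                if a:
                    total += 1
                    if not g:
                        outside += 1

    # If no die pixels are visible at all, return the worst cost so that
    # hiding the dice from the cameras is not rewarded.
    if total == 0:
        return 1.0
    return outside / total


# Two tiny "cameras" with 1x2 images: one die pixel matches, one does not.
toy_goal_masks = [[[255, 0]], [[0, 255]]]
toy_segmentation_masks = [[[255, 0]], [[255, 0]]]
reward = -toy_mask_cost(toy_goal_masks, toy_segmentation_masks)
print(reward)  # -0.5
```

The sign convention mirrors the example above: the function returns a cost, and the reward is its negation.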

Format of goal.json

By default, a random pattern is sampled when you execute a job on the robot. If you want to test specific cases, you can specify a fixed pattern by adding a file goal.json to your repository (see Goal Sampling Settings). For the task of this stage, the file has to have the following format:

```json
{
    "goal": [
        [-0.066, 0.154, 0.011],
        [-0.131, -0.11, 0.011],
        [0.022, 0.176, 0.011],
        ...
    ]
}
```

Each element of the list corresponds to the (x, y, z)-position of one die. The number of goal positions needs to match the number of dice (see NUM_DICE).
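If you prefer to generate goal.json programmatically, a small helper like the following can build the file content and check the number of positions. build_goal and save_goal are hypothetical helpers, not part of trifinger_simulation, and the number of dice is passed in explicitly rather than read from NUM_DICE. The sketch assumes that positions are given in metres and that each goal is the centre of a die lying on the ground: in the example file above, z = 0.011 is exactly half of the 22 mm die width.

```python
import json

# Die edge length from the specification above (22 mm), in metres.
DIE_WIDTH = 0.022


def build_goal(xy_positions, num_dice):
    """Build the goal.json content from (x, y) positions in metres.

    num_dice has to be the NUM_DICE value of the task; it is passed in
    explicitly here. The z-coordinate is set to half the die width,
    assuming each position is the centre of a die lying on the ground.
    """
    goal = [[float(x), float(y), DIE_WIDTH / 2] for x, y in xy_positions]
    if len(goal) != num_dice:
        raise ValueError(f"expected {num_dice} goal positions, got {len(goal)}")
    return {"goal": goal}


def save_goal(goal, path="goal.json"):
    """Write the goal dictionary to a JSON file."""
    with open(path, "w") as f:
        json.dump(goal, f, indent=4)


# num_dice=3 only keeps the example short; a real goal.json needs
# exactly NUM_DICE entries.
goal = build_goal([(-0.066, 0.154), (-0.131, -0.11), (0.022, 0.176)], num_dice=3)
print(goal["goal"][0])  # [-0.066, 0.154, 0.011]
# save_goal(goal)  # would write goal.json to the current directory
```

Checking the count when building the file catches a mismatch with the number of dice before the job is submitted, instead of when it runs on the robot.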

Using the Real Robot

Changes Compared to Stage 1

Note that there are a few important differences compared to Stage 1:

- We don’t provide integrated object tracking for the dice. As a result, the camera observations no longer contain an object_pose. Unfortunately, this also means that different classes are needed for the robot frontend and the log readers; see Robot Interface and Robot/Camera Data Files for more information.
- If you are using trifinger_simulation.TriFingerPlatform for local training/testing, you need to set the object_type argument to DICE:

```python
import trifinger_simulation

platform = trifinger_simulation.TriFingerPlatform(
    visualization=True,
    object_type=trifinger_simulation.trifinger_platform.ObjectType.DICE,
)
```