The TriFingerPlatform Class¶

The TriFingerPlatform class provides access to the

simulation of the TriFinger platform using the same interface as for the real

robot.

For a detailed explanation of the software interface on the real robot, and the logic behind it, please check the paper on the open-source version of the challenge robot (both have identical software).

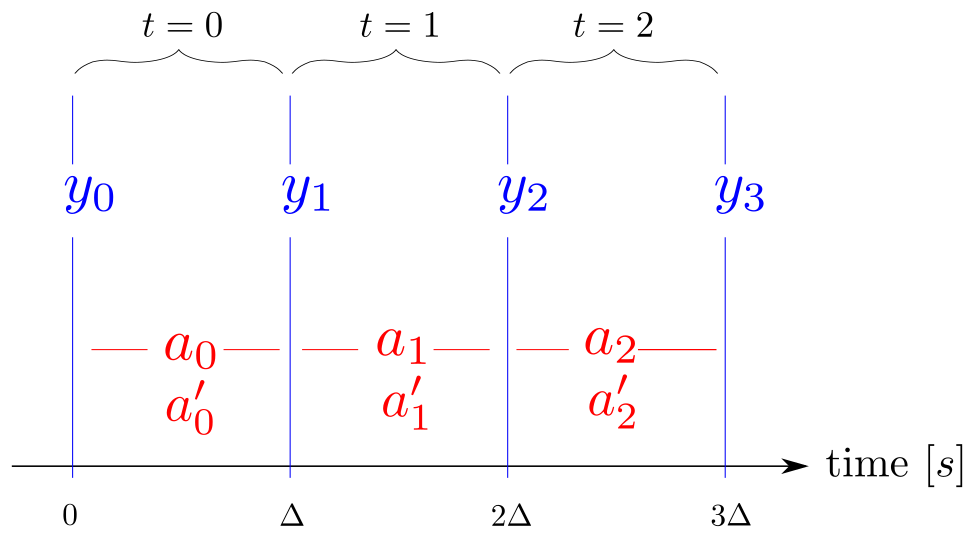

On the real robot, we are using time series for all robot data like actions

and observations. The user does not send actions directly to the robot but

appends them to the “desired actions” time series which serves as a queue.

At each time step, identified by a time index t, the action at position t is

taken from the “desired actions” time series and processed. At the same time an

observation is acquired from the robot and added to the “observation” time

series. This means that the effect of the desired action a_t is not yet

visible in the observation y_t as is illustrated below. (a'_t

corresponds to the applied action, see Desired vs Applied Action)

In the software, the method

append_desired_action() is used to

append actions to the time series. It returns the time index t at which the

appended action will be executed. Methods like

get_observation() expect a time index as

input and will return the data corresponding to this time step. If the given

time index refers to a point in the future, these methods will block and wait

until that point is reached.

This allows for very simple code that is automatically executed at the control rate of the robot:

platform = trifinger_simulation.TriFingerPlatform()

zero_torque_action = platform.Action()

t = platform.append_desired_action(zero_torque_action)

# get the first observation

observation = platform.get_robot_observation(t)

while True:

action = smart_algorithm_to_compute_next_action(observation)

t = platform.append_desired_action(action)

# The t given above refers to the moment the given action will be

# executed. Right now, this is in the future, so the following call

# will automatically wait until the action is actually applied to the

# platform

observation = platform.get_robot_observation(t)

As the simulation is not real-time critical, the behaviour is a bit different here:

append_desired_action()will directly apply the action and step the simulation.There is no actual time series. The API in the simulation follows the same principle to make the transition to the real robot easier. However, it is implemented with a buffer size of 1, so the getter methods only provide data for the current time step.

It is possible to access information from t + 1. In a typical gym environment, it is expected that the observation returned by

step(action)belongs to the moment after the given action is executed (this corresponds to the time index t + 1). To make it easier to get started, we therefore allow to access the observations of this time index in the simulation.

For more information on the API of the real robot (which will be used in the later phases of the challenge), see our publication TriFinger: An Open-Source Robot for Learning Dexterity.

Usage Example¶

#!/usr/bin/env python3

"""Simple demo on how to use the TriFingerPlatform interface."""

import time

import trifinger_simulation

if __name__ == "__main__":

platform = trifinger_simulation.TriFingerPlatform(visualization=True)

# Move the fingers to random positions

while True:

position = platform.spaces.robot_position.gym.sample()

finger_action = platform.Action(position=position)

# apply action for a few steps, so the fingers can move to the target

# position and stay there for a while

for _ in range(100):

t = platform.append_desired_action(finger_action)

# sleep after each step so that the visualization happens in real

# time

time.sleep(platform.get_time_step())

# show the latest observations

robot_observation = platform.get_robot_observation(t)

print("Finger0 Joint Positions: %s" % robot_observation.position[:3])

# the cube pose is part of the camera observation

camera_observation = platform.get_camera_observation(t)

cube_pose = camera_observation.object_pose

print("Cube Position (x, y, z): %s" % cube_pose.position)

Desired vs Applied Action¶

The action given by the user is called the desired action. This action may be

altered before it is actually applied on the robot, e.g. by some safety checks

limiting torque and velocity. This altered action is called the applied

action. You may use

get_applied_action() to see what action

actually got applied on the robot.

API Documentation¶

-

class

trifinger_simulation.TriFingerPlatform(visualization=False, initial_robot_position=None, initial_object_pose=None, enable_cameras=False, time_step_s=0.001, object_type=<ObjectType.COLORED_CUBE: 1>)[source]¶ Wrapper around the simulation providing the same interface as

robot_interfaces::TriFingerPlatformFrontend.The following methods of the robot_interfaces counterpart are not supported:

get_robot_status()

wait_until_timeindex()

-

__init__(visualization=False, initial_robot_position=None, initial_object_pose=None, enable_cameras=False, time_step_s=0.001, object_type=<ObjectType.COLORED_CUBE: 1>)[source]¶ Initialize.

- Parameters

visualization (bool) – Set to true to run visualization.

initial_robot_position (Optional[Sequence[float]]) – Initial robot joint angles

initial_object_pose – Only if

object_type == COLORED_CUBE: Initial pose for the manipulation object. Can be any object with attributesposition(x, y, z) andorientation(x, y, z, w). If not set, a default pose is used.enable_cameras (bool) – Set to true to enable rendered images in the camera observations. If false camera observations are still available but the images will not be initialized. By default this is disabled as rendering of images takes a lot of computational power. Therefore the cameras should only be enabled if the images are actually used.

time_step_s (float) – Simulation time step duration in seconds.

object_type (trifinger_simulation.trifinger_platform.ObjectType) – Which type of object to load. This also influences some other aspects: When using the cube, the camera observation will contain an attribute

object_pose.

-

Action(torque=None, position=None)¶

-

get_current_timeindex()¶

-

get_timestamp_ms(t)¶

-

get_desired_action(t)¶

-

get_applied_action(t)¶

-

get_robot_observation(t)¶ Get observation of the robot state (joint angles, torques, etc.). See

trifinger_simulation.SimFinger.get_observation().

-

get_camera_observation(t)[source]¶ Get camera observation at time step t.

Important

Actual images are only rendered if the class was created with enable_cameras=True in the constructor, otherwise the images in the observation are set to None. Other fields are still valid, though.

- Parameters

t (int) – The time index of the step for which the observation is requested. Only the value returned by the last call of

append_desired_action()is valid.- Returns

Observations of the three cameras. Depending of the object type used, this may also contain the object pose.

- Raises

ValueError – If invalid time index

tis passed.- Return type

Union[trifinger_simulation.trifinger_platform.TriCameraObservation, trifinger_simulation.trifinger_platform.TriCameraObjectObservation]

-

class

trifinger_simulation.trifinger_platform.ObjectType(value)[source]¶ Enumeration of supported object types.

-

NONE= 0¶

-

COLORED_CUBE= 1¶

-

DICE= 2¶

-

-

class

trifinger_simulation.trifinger_platform.ObjectPose[source]¶ A pure-python copy of trifinger_object_tracking::ObjectPose.

-

confidence¶ Estimate of the confidence for this pose observation.

- Type

float

-

orientation¶ Orientation of the object as (x, y, z, w) quaternion.

- Type

array

-

position¶ Position (x, y, z) of the object. Units are meters.

- Type

array

-

timestamp¶ Timestamp when the pose was observed.

- Type

float

-

-

class

trifinger_simulation.trifinger_platform.CameraObservation[source]¶ Pure-python copy of trifinger_cameras.camera.CameraObservation.

-

image¶ The image.

- Type

array

-

timestamp¶ Timestamp when the image was received.

- Type

float

-

-

class

trifinger_simulation.trifinger_platform.TriCameraObservation[source]¶ Python version of trifinger_cameras::TriCameraObservation.

This is a pure-python implementation of trifinger_cameras::TriCameraObservation, so we don’t need to depend on trifinger_cameras here.

-

cameras¶ List of observations of cameras “camera60”, “camera180” and “camera300” (in this order).

- Type

list of

CameraObservation

-

-

class

trifinger_simulation.trifinger_platform.TriCameraObjectObservation[source]¶ Python version of trifinger_object_tracking::TriCameraObjectObservation.

This is a pure-python implementation of trifinger_object_tracking::TriCameraObjectObservation, so we don’t need to depend on trifinger_object_tracking here.

-

cameras¶ List of observations of cameras “camera60”, “camera180” and “camera300” (in this order).

- Type

list of

CameraObservation

-

filtered_object_pose¶ Filtered pose of the object in world coordinates. In simulation, this is the same as the unfiltered object_pose.

- Type

-

object_pose¶ Pose of the object in world coordinates.

- Type

-